Everything About The Indexability of Your Website

Only indexed pages can show up on a search results page, so it is important to make sure that your pages are indexable. In this article, we look at what indexability is, which factors affect it, and how to keep your website indexable.

What Is Website Indexability?

Indexability refers to the ability of a web page to be indexed by search engines and included in their databases. In search engine optimization (SEO), indexability is crucial because it determines whether a particular webpage can be found and displayed on search engine results pages (SERPs).

When a search engine's crawler (also known as a bot or spider) visits a webpage, it analyzes the page's content and structure to determine if it should be included in the search engine's index.

The index is essentially a massive database that contains information about web pages and their content. Only after a page has been added to the index can it be shown in search results.

Key Factors Influencing Indexability

Several factors influence whether a web page can be indexed, and they are usually the first things to check when a website has an indexability issue. The key factors are listed below.

1. Robots.txt file

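The robots.txt file is a text file placed in the root directory of a website. It tells web crawlers which pages or sections of the site should not be crawled. Note that blocking crawling is not the same as blocking indexing: a URL disallowed in robots.txt can still end up in the index if other pages link to it, so use a noindex directive when a page must stay out of search results.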
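To see how these rules are evaluated, here is a minimal sketch that uses Python's standard `urllib.robotparser` module. The robots.txt content, the `example.com` URLs, and the `is_crawlable` helper are assumptions made up for illustration, and the check only answers whether crawling is allowed, not whether the page will be indexed.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given rules allow `user_agent` to crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules without any network call
    return parser.can_fetch(user_agent, url)


print(is_crawlable(ROBOTS_TXT, "https://example.com/blog/post"))    # True
print(is_crawlable(ROBOTS_TXT, "https://example.com/admin/login"))  # False
```

Running the same check against your list of important URLs is a quick way to spot pages that are blocked by accident.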

2. Meta Robots Tag

Webmasters can use the meta robots tag within HTML to provide crawler instructions on a specific page-by-page basis. For example, a page may include a meta tag that tells search engines not to index the content.
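As a rough illustration, the directives in a page's meta robots tag can be read with Python's standard `html.parser` module. The sample HTML and the `robots_directives` helper below are hypothetical, and the sketch only inspects the HTML you pass in.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def robots_directives(html: str) -> set[str]:
    """Return the set of meta robots directives present in `html`."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page that asks search engines not to index it.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print("noindex" in robots_directives(page))  # True
```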

3. Canonicalization

Canonical tags are used to indicate the preferred version of a webpage when multiple versions with similar content exist. This helps prevent duplicate content issues and makes it more likely that search engines index the preferred version.
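The sketch below shows one way to read a page's canonical tag and check whether the page points to itself or to another URL. It assumes the tag is written as `<link rel="canonical" href="...">` in the HTML; the `canonical_url` helper and the example URLs are made up for illustration.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_tokens and attr.get("href"):
            self.canonical = attr["href"].strip()


def canonical_url(html: str) -> Optional[str]:
    """Return the canonical URL declared in `html`, or None if absent."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


# Hypothetical product page whose URL carries a tracking parameter.
page_url = "https://example.com/shoes?ref=newsletter"
page_html = '<head><link rel="canonical" href="https://example.com/shoes"></head>'

declared = canonical_url(page_html)
if declared and declared != page_url:
    print(f"{page_url} defers to {declared}")
```

A page whose canonical URL points elsewhere is usually not the version search engines will show, which is worth knowing when that page seems to be missing from the index.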

4. Noindex Tag

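Webmasters can use the noindex value of the meta robots tag to explicitly instruct search engines not to index a specific page. This is useful for content that is not intended to be shown in search results. The page must remain crawlable for this to work, because a crawler has to fetch the page to see the tag.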

5. Crawl Errors

If a webpage has crawl errors, such as server errors, "not found" responses, or broken redirects, the crawler cannot retrieve its content and the page may not be indexed. Regularly monitoring and fixing crawl errors is essential for ensuring indexability.
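As a simple illustration, crawl results can be grouped by HTTP status code to find the pages that need attention. The category names, the `crawl_issues` helper, and the sample crawl log are assumptions made for this sketch, not the output of any particular tool.

```python
def classify_status(status: int) -> str:
    """Map an HTTP status code to a coarse crawl category."""
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if status in (404, 410):
        return "not found"
    if 400 <= status < 500:
        return "client error"
    if 500 <= status < 600:
        return "server error"
    return "unknown"


def crawl_issues(results: dict[str, int]) -> dict[str, str]:
    """Return only the URLs whose status is something other than 'ok'."""
    issues = {}
    for url, status in results.items():
        category = classify_status(status)
        if category != "ok":
            issues[url] = category
    return issues


# Hypothetical crawl log: URL -> status code returned to the crawler.
crawl_log = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
    "https://example.com/checkout": 503,
}
for url, problem in crawl_issues(crawl_log).items():
    print(f"{url}: {problem}")
```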

6. Content Quality and Relevance

The content on a webpage should be of high quality, relevant, and unique. Search engines prioritize pages with valuable content, and such pages are more likely to be indexed.

7. XML Sitemaps

An XML sitemap is a list of URLs that the website owner wants search engines to crawl and index. Providing one helps search engines understand the structure of a website and makes it more likely that all relevant pages are discovered during the crawling process.
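To show what such a file contains, here is a minimal sketch that builds a sitemap with Python's standard `xml.etree.ElementTree` module. The `build_sitemap` helper and the example URLs are hypothetical, and only the required `<loc>` element is written for each URL.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a sitemap XML document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of pages that should be crawled and indexed.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/indexability",
])
print(sitemap_xml)
```

The resulting text would typically be saved as `sitemap.xml` at the site root and submitted to search engines.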

8. Accessibility

If a webpage requires user authentication, has overly complex navigation, or uses technologies that search engines cannot crawl, it may not be indexed.

Ensuring that web pages are indexable is a fundamental aspect of SEO. It determines whether a webpage can be considered for inclusion in search engine results, and it directly impacts a website's visibility and organic traffic.

SEO Best Practices to Ensure Indexability

As mentioned above, indexability influences how a website performs in search results. Following a few SEO best practices helps ensure that all the important pages of your website are indexable. Here are the practices you can implement:

1. Site Audit

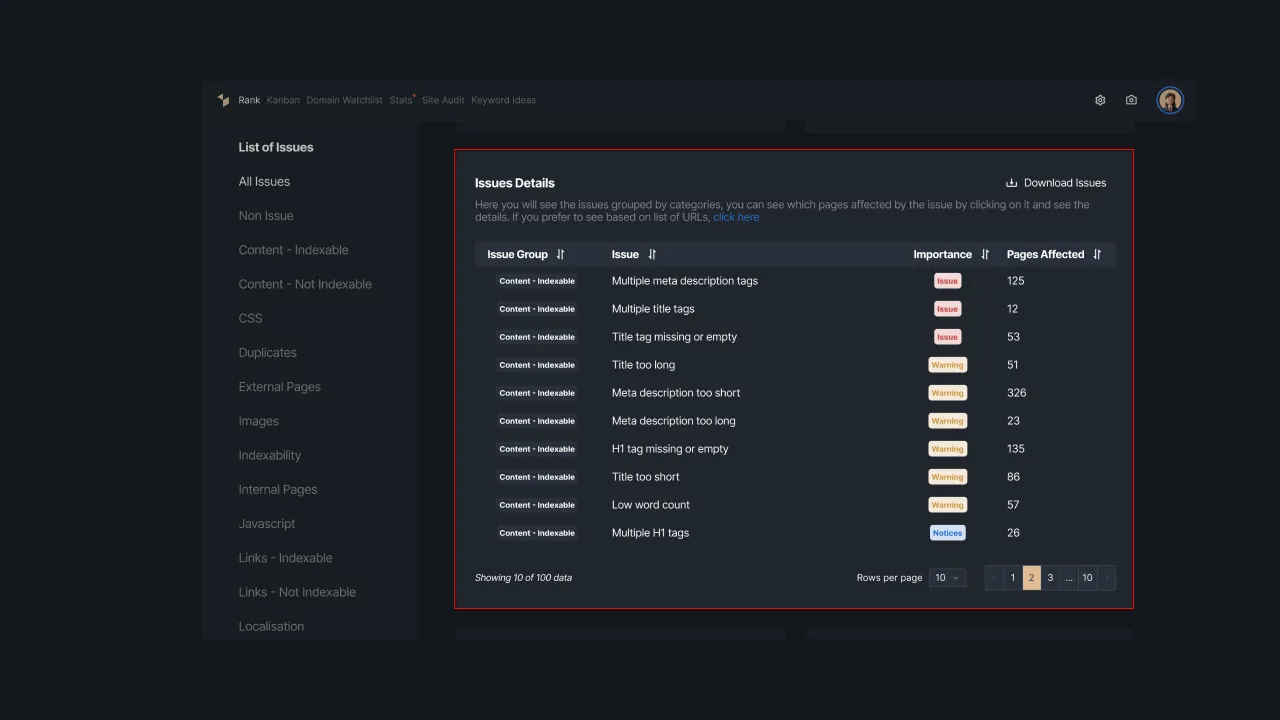

First of all, run a site audit to see whether your website has any indexability issues. You can use the Sequence Stats Site Audit feature to get a detailed audit result, then open the indexability section of the report. Picture 1 shows what it looks like.

Picture 1: Indexability report in Site Audit

2. Create an XML Sitemap

Generate and submit an XML sitemap to search engines. This file provides a roadmap of your website's structure and helps search engines discover and index your pages more efficiently.

3. Optimize Robots.txt File

Review and update your robots.txt file to ensure that it doesn't block search engine crawlers from accessing important pages on your site. Use the file to specify which areas should be crawled and which should be excluded.

4. Ensure Proper Redirects

When you need to redirect URLs, use proper redirect methods (e.g., 301 redirects for permanent changes). This helps search engines understand the correct page to index.
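The sketch below traces a redirect chain and flags loops or overly long chains. To keep it runnable without network access, it works on a hypothetical table of responses (URL mapped to status code and `Location` target) instead of live requests. The `trace_redirects` helper and the limit of five hops are assumptions for illustration.

```python
from typing import Optional

# A response is (status code, Location target or None).
Response = tuple[int, Optional[str]]

REDIRECT_CODES = (301, 302, 307, 308)


def trace_redirects(
    start: str, responses: dict[str, Response], max_hops: int = 5
) -> tuple[list[str], str]:
    """Follow redirects from `start`; return the chain and a short verdict."""
    chain = [start]
    url = start
    while True:
        # URLs missing from the table are treated as 404 in this sketch.
        status, location = responses.get(url, (404, None))
        if status not in REDIRECT_CODES or location is None:
            return chain, f"ends with {status}"
        if location in chain:
            chain.append(location)
            return chain, "redirect loop"
        chain.append(location)
        if len(chain) - 1 > max_hops:
            return chain, "too many hops"
        url = location


# Hypothetical responses observed while crawling a site.
responses = {
    "/old-page": (301, "/new-page"),
    "/new-page": (200, None),
    "/a": (301, "/b"),
    "/b": (301, "/a"),
}
print(trace_redirects("/old-page", responses))
print(trace_redirects("/a", responses))
```

A chain that ends with a 200 status after a single hop is the healthy case. Loops and long chains are the ones that tend to keep a page from being indexed.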

5. Check for Noindex Tags

Periodically review your website's pages to make sure that no noindex tags have been added unintentionally. These tags prevent pages from being indexed.

6. Monitor JavaScript Rendering

Ensure that important content is not hidden behind JavaScript, as search engines may have difficulty indexing dynamically loaded content. Use progressive enhancement techniques to make essential content accessible.
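One rough way to check this is to look for key phrases in the HTML as the server delivers it, before any JavaScript runs. The sketch below ignores text inside `<script>` and `<style>` tags and reports phrases that are missing. The `missing_from_raw_html` helper and the sample page are hypothetical, and the check is only a heuristic, not a model of how any search engine renders pages.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text that appears outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the server-delivered text."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


# Hypothetical page: the heading is in the HTML, the offer is injected by a script.
raw_html = (
    "<html><body><h1>Pricing</h1><div id='app'></div>"
    "<script>render('Free trial for 14 days')</script></body></html>"
)
print(missing_from_raw_html(raw_html, ["Pricing", "Free trial"]))
```

Phrases reported as missing depend on JavaScript to appear, so they are candidates for server-side rendering or progressive enhancement.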

By implementing these SEO best practices, you can optimize the indexability of your website, improve its visibility in search results, and enhance the overall user experience.

Regularly monitoring your site's performance using Sequence Stats and staying informed about search engine updates will help you adapt to evolving best practices and maintain strong SEO.